7 Python EDA Tricks for Identifying and Fixing Data Issues

KDnuggets

·

How to Use the Factory Pattern in Python - A Practical Guide

freeCodeCamp.org

·

Python Trending Weekly #139: Why Do People Keep Wanting to Replace Data Analysts?

豌豆花下猫 | Python猫

·

Processing a Billion-Row Dataset in Python (Using Vaex)

KDnuggets

·

Python 3.13 and 3.14 Are Now Available

Vercel News

·

Daggr: Introducing an Open-Source Python Library for Inspectable AI Workflows

InfoQ

·

Python Trending Weekly #138: Is Python Being Killed by Incremental Improvements?

豌豆花下猫 | Python猫

·

Managing Secrets and API Keys in Python Projects (A .env Guide)

KDnuggets

·

How to Use the Builder Pattern in Python - A Practical Guide for Developers

freeCodeCamp.org

·

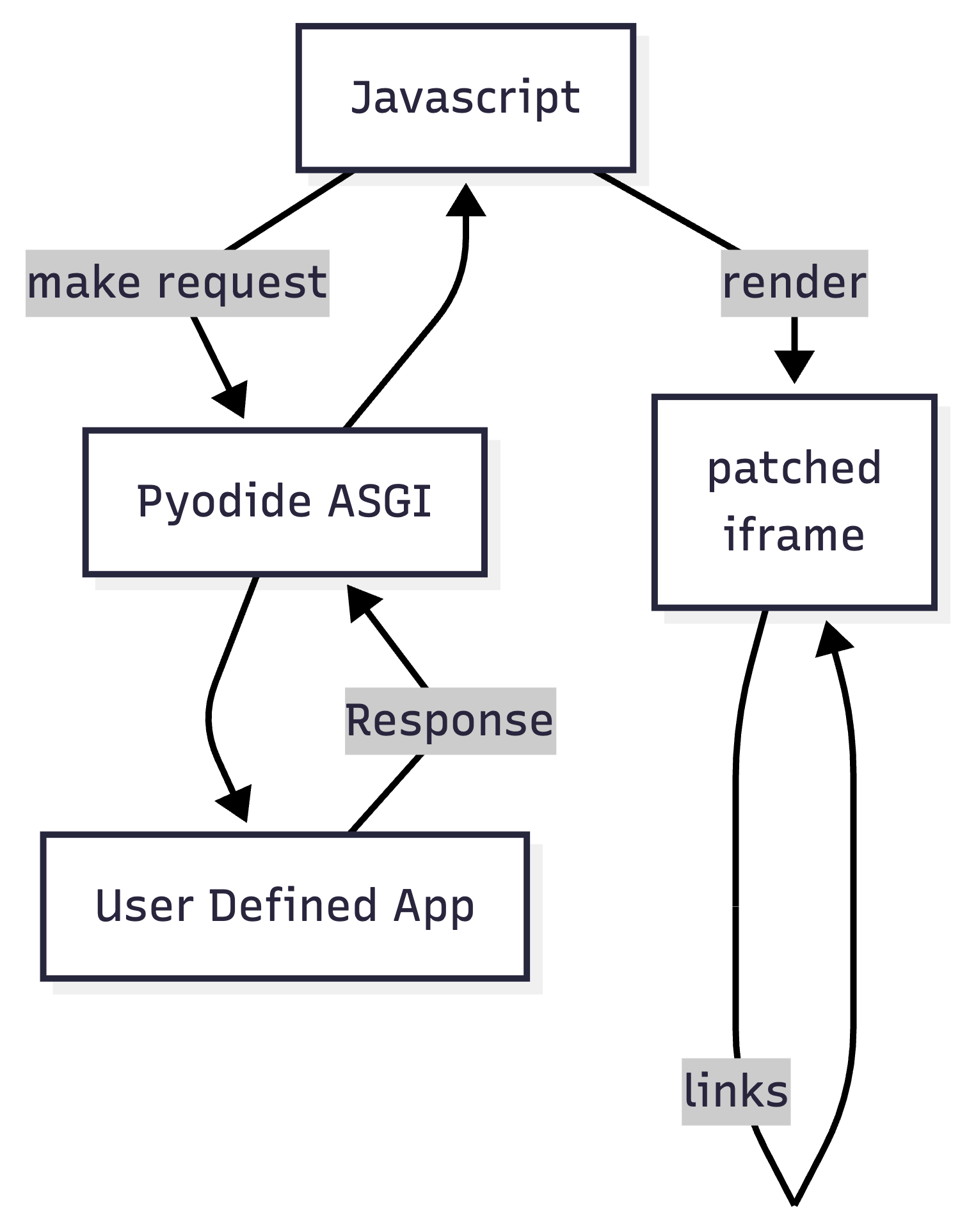

Webasgi - Running Python Web Apps in the Browser

Jamie's Blog

·

Distributed Application Platform Aspire Adds Support for JavaScript and Python

The New Stack

·