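Stop using it the wrong way! The real reason Midjourney Video "fizzled on arrival" has been uncovered: it is not a Sora competitor but a top-tier tool for animated images, and we will show you step by step how to use it to dominate Xiaohongshu.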

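Ahhhhhhh! Fam! Today I have to rave about Midjourney Video! This tool honestly blew me away! In just 5 seconds it generates stunning animated images with the atmosphere and detail turned all the way up. It feels like a door opening onto a whole new world! I used to think it "fizzled on arrival" because it was useless, but now I realize we were simply using it wrong! It was never meant for making videos. It is the king of animated images! You have to jump in, you have to try it! Bottom line first: Midjourney...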

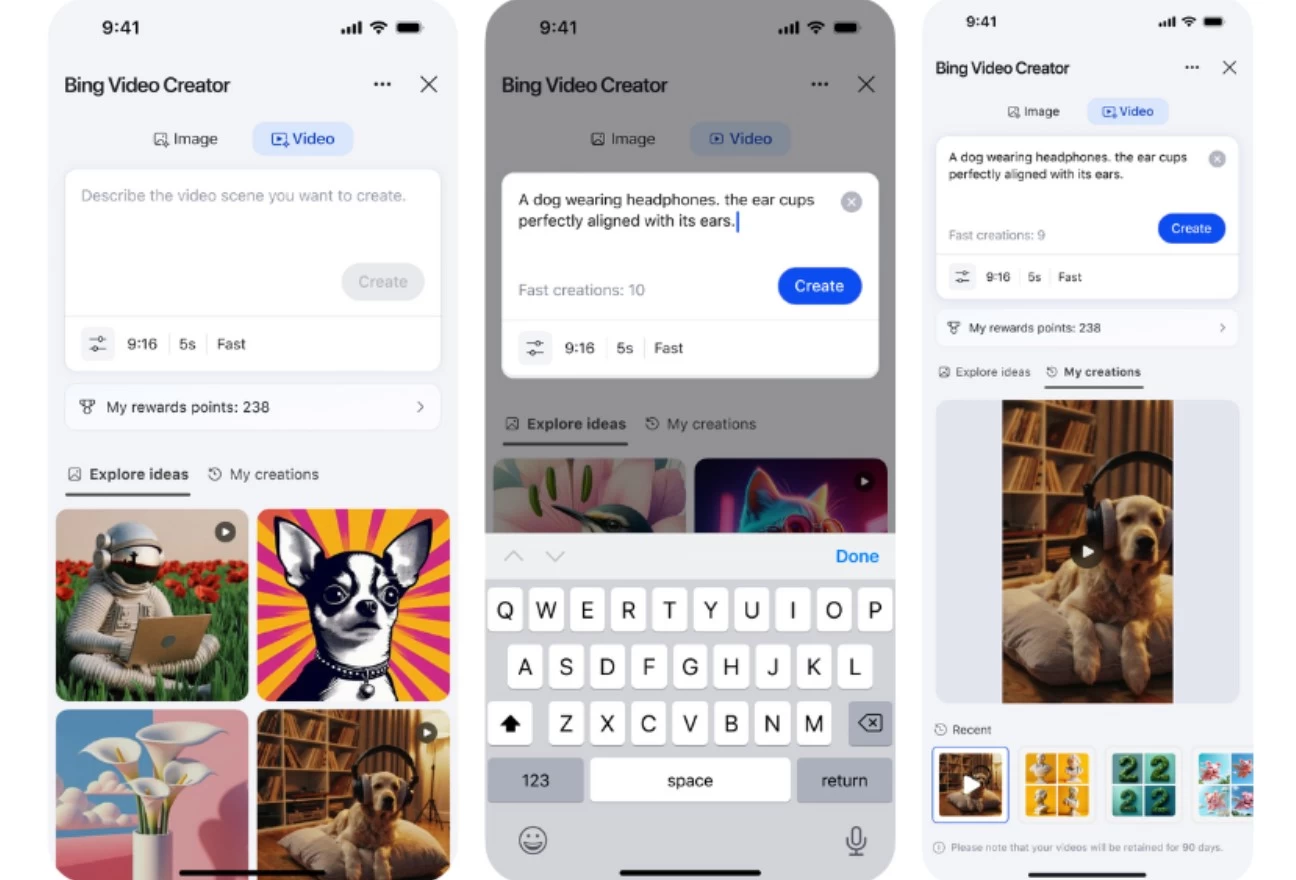

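Midjourney Video is an eye-catching AI video generation tool, but its complicated workflow has kept it from wide adoption. The clips it generates look excellent, yet they are not very practical, because users struggle to stitch them into a complete story. The recommendation is to treat the output as animated images and convert it to Live Photos for sharing, which improves its reach.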