Integrating NVIDIA TensorRT-LLM into the Databricks Inference Stack

Over the past six months, we've been working with NVIDIA to get the most out of their new TensorRT-LLM library. TensorRT-LLM provides an easy-to-use Python interface to integrate with a web server...

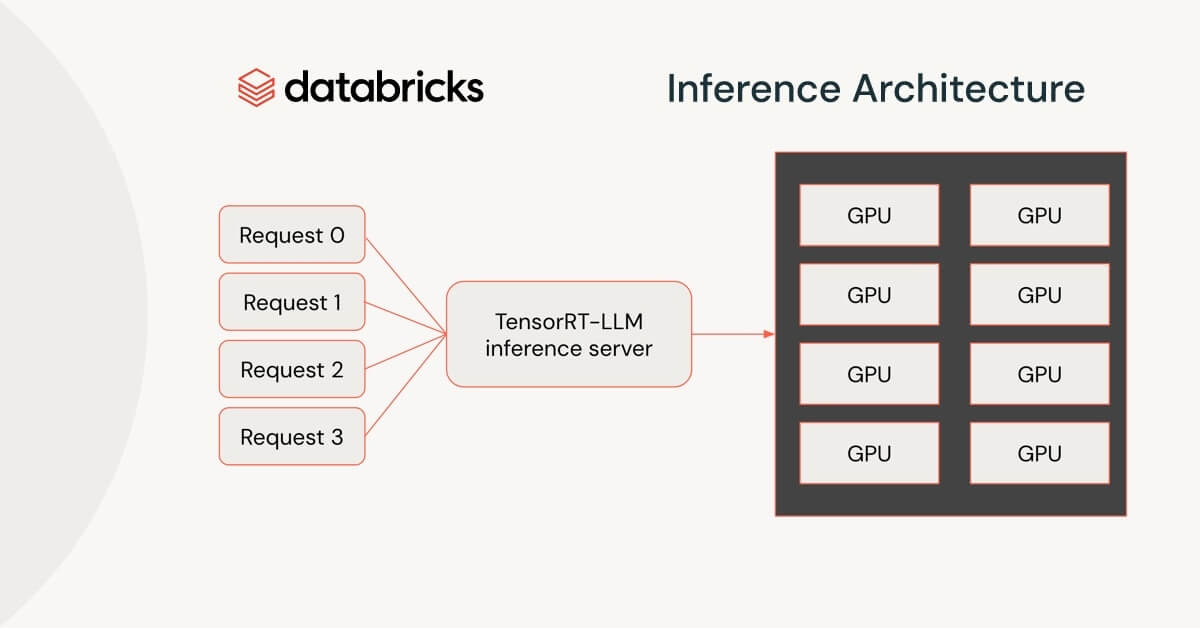

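The Databricks Mosaic R&D team launched the first version of its inference serving architecture seven months ago. Starting in January 2024, the team will serve large language models (LLMs) with a new inference engine built on NVIDIA TensorRT-LLM. TensorRT-LLM is an open-source library for state-of-the-art LLM inference. It integrates with NVIDIA's TensorRT deep learning compiler and provides optimized kernels for key operations, along with communication primitives for efficient multi-GPU serving. The team's collaboration with NVIDIA makes it faster and easier to serve models from Hugging Face, as well as your own pretrained or fine-tuned models based on the MPT architecture.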
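To make "communication primitives for efficient multi-GPU serving" a little more concrete, here is a minimal conceptual sketch of why tensor-parallel inference needs an all-reduce. It assumes a row-parallel split of a single linear layer and simulates GPUs as list shards in pure Python. The helper names (`matvec`, `shard_rows`, `all_reduce_sum`, `row_parallel_matvec`) are hypothetical illustrations. This is not TensorRT-LLM's API and does not reflect its actual kernels.

```python
# Conceptual sketch (hypothetical helpers, not TensorRT-LLM code):
# a row-parallel linear layer y = x @ W split across simulated "GPUs".
# Each rank holds a slice of W's rows and the matching slice of x, computes
# a partial result, and an all-reduce (here, an elementwise sum) combines them.

def matvec(x, W):
    """Compute x @ W for a vector x (length k) and a matrix W (k rows x n cols)."""
    n = len(W[0])
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(n)]

def shard_rows(x, W, world_size):
    """Split the reduction dimension k into equal contiguous chunks, one per rank."""
    k = len(x)
    assert k % world_size == 0, "this sketch assumes k is divisible by world_size"
    chunk = k // world_size
    return [(x[r * chunk:(r + 1) * chunk], W[r * chunk:(r + 1) * chunk])
            for r in range(world_size)]

def all_reduce_sum(partials):
    """Stand-in for an all-reduce: every rank receives the elementwise sum."""
    total = [sum(vals) for vals in zip(*partials)]
    return [list(total) for _ in partials]  # one identical copy per rank

def row_parallel_matvec(x, W, world_size):
    """Each simulated rank computes a partial product; all-reduce combines them."""
    partials = [matvec(xs, Ws) for xs, Ws in shard_rows(x, W, world_size)]
    return all_reduce_sum(partials)[0]  # any rank's copy is the full result

if __name__ == "__main__":
    x = [1, 2, 3, 4]
    W = [[1, 0], [0, 1], [1, 1], [2, -1]]
    print(row_parallel_matvec(x, W, world_size=2))  # [12, 1]
```

In a real multi-GPU deployment the summation step is a collective operation running across devices (for example, an NCCL all-reduce) and sits on the critical path of every such layer, which is why fast communication primitives matter for serving latency.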